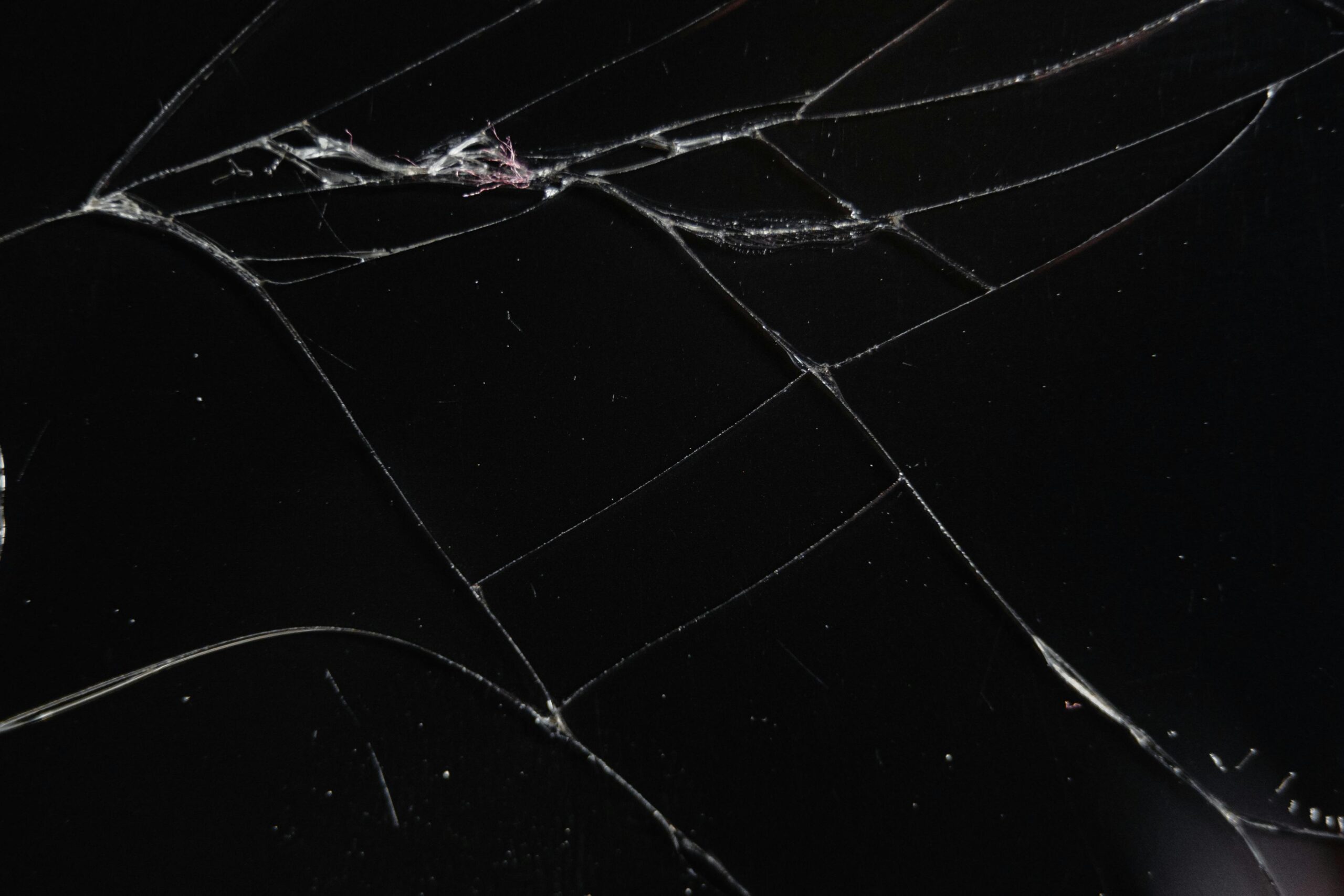

Complex systems surround us—from global markets to ecosystems—yet their hidden fragilities often escape our notice until catastrophic failures occur.

🔍 The Invisible Architecture of Complexity

We live in an era defined by interconnected networks that shape every aspect of modern life. Financial markets, supply chains, digital infrastructure, and ecological systems all operate as complex adaptive systems. These structures exhibit emergent behaviors that cannot be predicted simply by examining their individual components. What makes them particularly challenging is their concealed vulnerability—the unseen fragility that lurks beneath apparently stable surfaces.

The 2008 financial crisis, the COVID-19 pandemic’s impact on global supply chains, and cascading power grid failures demonstrate how quickly stability can transform into chaos. These events share a common characteristic: each exposed hidden weaknesses within complex systems that most experts had failed to anticipate. Understanding these invisible fault lines has become essential for leaders, organizations, and individuals navigating our interconnected world.

Defining Hidden Fragility in Modern Systems

Hidden fragility refers to vulnerabilities within complex systems that remain undetected during normal operations but trigger disproportionate consequences when stressed. Unlike obvious weaknesses, these fragilities are masked by the system’s apparent robustness during stable periods. They emerge from the intricate relationships between components rather than from individual element failures.

Traditional risk assessment often focuses on known variables and historical patterns. However, complex systems generate novel failure modes through unexpected interactions. A supply chain might function flawlessly for years, yet a single disruption in one region can cascade globally because of tightly coupled dependencies that remained invisible during normal operations.

The Paradox of Optimization

Modern systems are relentlessly optimized for efficiency, cost reduction, and performance. This optimization process, while beneficial during stable conditions, systematically removes redundancy and slack—the very elements that provide resilience during disruptions. Just-in-time manufacturing reduces inventory costs but eliminates buffers that could absorb supply shocks.
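The buffer argument can be made concrete with a toy inventory model. This is a minimal sketch under stated assumptions: the function name `units_short`, the delivery schedule, and the demand figures are all hypothetical and chosen only to show how a stock buffer absorbs a short supply outage.

```python
def units_short(buffer_stock, deliveries, daily_demand):
    """Return total unmet demand, given a starting stock and a delivery schedule."""
    stock = buffer_stock
    shortfall = 0
    for delivered in deliveries:
        stock += delivered
        used = min(stock, daily_demand)   # cannot consume more than is on hand
        shortfall += daily_demand - used
        stock -= used
    return shortfall

# Hypothetical schedule: 10 units arrive daily, except during a 3-day outage.
deliveries = [10, 10, 0, 0, 0, 10, 10]

lean = units_short(buffer_stock=0, deliveries=deliveries, daily_demand=10)
buffered = units_short(buffer_stock=30, deliveries=deliveries, daily_demand=10)
print(lean, buffered)
```

In this toy setup the lean configuration misses all three days of demand, while the buffered one misses none. The buffer carries a holding cost on every normal day, which is exactly the expense that optimization tends to remove.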

This efficiency-fragility trade-off represents one of the fundamental challenges in system design. Organizations maximize short-term performance metrics while unknowingly increasing long-term vulnerability. The hidden fragility accumulates gradually, invisible in quarterly reports and performance dashboards, until a triggering event exposes the underlying brittleness.

🌐 Interconnectedness: The Double-Edged Sword

Network effects have powered extraordinary innovation and growth across industries. Digital platforms connect billions of users, global supply chains enable unprecedented consumer choice, and financial systems facilitate instant capital flows worldwide. Yet this same interconnectedness creates vulnerability pathways that transcend traditional boundaries.

When systems become tightly coupled, failures no longer remain localized. A cybersecurity breach in one organization can compromise entire industries. A drought in one agricultural region affects food prices globally. The complexity of these interdependencies makes it nearly impossible to map all potential failure cascades.

Contagion Mechanisms in Complex Networks

Complex systems exhibit several contagion patterns that amplify initial disturbances. Direct dependencies create obvious transmission paths—when supplier A fails, manufacturer B experiences immediate impacts. More insidious are indirect dependencies, where connections span multiple intermediary nodes, creating vulnerability chains that remain hidden until activated.
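One way to surface indirect dependencies is to walk the dependency graph outward from a failed node. The sketch below uses a breadth-first search over a hypothetical `supplies` mapping (who supplies whom); the node names are illustrative, and the model assumes the worst case in which every downstream node is exposed.

```python
from collections import deque

def downstream_of(failed, supplies):
    """Return every node reachable from `failed`, mapped to its hop distance."""
    distance = {failed: 0}
    queue = deque([failed])
    while queue:
        node = queue.popleft()
        for customer in supplies.get(node, []):
            if customer not in distance:
                distance[customer] = distance[node] + 1
                queue.append(customer)
    del distance[failed]  # report only the nodes affected by the failure
    return distance

# Hypothetical supply network: each key supplies the nodes in its list.
supplies = {
    "supplier_A": ["manufacturer_B"],
    "manufacturer_B": ["distributor_C", "distributor_D"],
    "distributor_C": ["retailer_E"],
}

exposed = downstream_of("supplier_A", supplies)
direct = [n for n, hops in exposed.items() if hops == 1]
indirect = [n for n, hops in exposed.items() if hops > 1]
print(direct, indirect)
```

Here only `manufacturer_B` depends on the failed supplier directly, yet three further nodes are exposed through intermediaries. Real networks are rarely mapped this completely, which is part of why such chains stay hidden.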

Feedback loops further complicate system behavior. Negative feedback typically stabilizes systems, dampening disturbances. Positive feedback amplifies changes, potentially triggering runaway dynamics. Complex systems contain both types simultaneously, with their relative dominance shifting based on system state and external conditions. Predicting which feedback mechanism will prevail during stress represents a significant analytical challenge.
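The difference between the two feedback types shows up even in a one-variable toy model. The sketch below is an illustration rather than a model of any real system: `simulate` and its `gain` parameter are hypothetical, and the update rule simply adds a fixed fraction of the current deviation back onto itself at each step.

```python
def simulate(gain, disturbance=1.0, steps=10):
    """Iterate x <- x + gain * x and return the path of deviations from equilibrium."""
    x = disturbance
    path = [x]
    for _ in range(steps):
        x = x + gain * x
        path.append(x)
    return path

damped = simulate(gain=-0.5)   # negative feedback: each step removes half the deviation
runaway = simulate(gain=0.5)   # positive feedback: each step adds half the deviation

print(f"negative feedback after 10 steps: {damped[-1]:.4f}")
print(f"positive feedback after 10 steps: {runaway[-1]:.1f}")
```

With a negative gain the initial disturbance shrinks toward zero; with a positive gain the same disturbance grows more than fiftyfold in ten steps. In a real system the effective gain can change sign as conditions shift, which is what makes the dominant mechanism hard to predict.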

The Illusion of Control and Predictability

Human cognitive biases compound the challenge of managing complex systems. We instinctively seek patterns, construct causal narratives, and assume continuity based on past experience. These tendencies served our ancestors well in simpler environments but mislead us when confronting genuinely complex adaptive systems.

The planning fallacy leads organizations to underestimate implementation timelines and overestimate their control over outcomes. Confirmation bias causes decision-makers to seek information that validates existing strategies while dismissing warning signals. Recency bias gives disproportionate weight to recent stable periods, fostering complacency about potential disruptions.

When Models Fail Us

Mathematical models and simulations have become indispensable tools for understanding complex systems. However, all models simplify reality by necessity. They incorporate assumptions, exclude variables deemed insignificant, and operate within defined boundaries. The hidden fragilities often reside precisely in these simplifications—in the interactions between modeled and unmodeled factors.

The financial models that failed spectacularly in 2008 weren’t mathematically incorrect; they were incomplete. They captured normal market dynamics effectively but failed to account for extreme correlations during stress periods. The models worked until they didn’t, and that transition occurred with devastating speed because the hidden fragilities remained outside the modeling framework.

⚡ Identifying Invisible Vulnerabilities

Detecting hidden fragilities requires approaches that differ fundamentally from traditional risk management. Rather than focusing exclusively on probable scenarios, organizations must consider possible scenarios—even those that seem unlikely based on historical data.

Stress testing represents one valuable methodology. By subjecting systems to extreme conditions in controlled environments, organizations can reveal breaking points and cascading failure modes. However, stress tests face inherent limitations—they can only examine scenarios that designers imagine, potentially missing truly novel failure paths.
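A stress test can be sketched as a sweep over shock sizes applied to a simplified model. The example below assumes a hypothetical load-sharing rule: when a node is overloaded it fails and its load is split evenly among the survivors. The function name, capacities, and loads are illustrative only.

```python
def surviving_nodes(capacities, load_per_node, shock):
    """Add `shock` load to node 0, redistribute load from failed nodes, count survivors."""
    loads = [load_per_node] * len(capacities)
    loads[0] += shock
    alive = [True] * len(capacities)
    while True:
        failed = [i for i, ok in enumerate(alive) if ok and loads[i] > capacities[i]]
        if not failed:
            return sum(alive)
        shed = 0.0
        for i in failed:
            alive[i] = False
            shed += loads[i]
        survivors = [i for i, ok in enumerate(alive) if ok]
        if not survivors:
            return 0
        for i in survivors:
            loads[i] += shed / len(survivors)  # survivors absorb the failed load equally

# Sweep shock sizes against five nodes running at 80% of capacity.
results = {shock: surviving_nodes([10] * 5, 8, shock) for shock in range(5)}
print(results)

# Same shock with more headroom per node (capacity 12 instead of 10).
with_slack = surviving_nodes([12] * 5, 8, 5)
print(with_slack)
```

In this toy system a shock of 2 causes no failures, while a shock of 3 brings down all five nodes, so the breaking point is abrupt rather than gradual. The sweep only covers the single failure mechanism written into the model, which mirrors the limitation described above.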

Signal Detection in Noisy Environments

Complex systems generate continuous data streams, but distinguishing meaningful warning signals from normal variation presents significant challenges. Early warning indicators exist for many system failures, but they’re often subtle, ambiguous, or buried within vast quantities of routine information.

Effective signal detection requires both quantitative analytics and qualitative judgment. Anomaly detection algorithms can flag statistical deviations, but human experts must interpret whether these anomalies represent genuine threats or benign variations. Building this interpretive capacity requires deep system knowledge combined with awareness of how hidden fragilities manifest.
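As a rough illustration of the quantitative half of that pairing, the sketch below flags readings that sit far from a trailing average, measured in standard deviations (a rolling z-score). The window size, threshold, and sample readings are assumptions chosen for the example, not recommended settings.

```python
from statistics import mean, stdev

def flag_anomalies(readings, window=5, threshold=3.0):
    """Return indices whose value is more than `threshold` standard deviations
    away from the mean of the preceding `window` readings."""
    flagged = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and abs(readings[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged

# Hypothetical sensor readings with one spike at index 7.
readings = [100, 101, 99, 100, 102, 100, 101, 130, 100, 99]
candidates = flag_anomalies(readings)
print(candidates)
```

The spike is flagged, but once it enters the trailing window it inflates the standard deviation and makes later deviations harder to detect. Whether a flagged reading reflects a genuine threat or a faulty sensor is still a question for someone who knows the system.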

Building Antifragility into Systems

Nassim Nicholas Taleb introduced the concept of antifragility—systems that gain strength from disorder and volatility rather than merely resisting damage. While robustness aims to withstand shocks unchanged and resilience focuses on recovery after disruption, antifragility represents a qualitatively different property.

Antifragile systems incorporate several design principles. They maintain redundancy and slack resources, enabling flexible responses to unexpected conditions. They exhibit modularity, where components can fail without triggering system-wide collapse. They implement negative feedback mechanisms that prevent small disturbances from amplifying into catastrophic failures.

Strategic Redundancy and Deliberate Inefficiency

Building antifragility often requires accepting deliberate inefficiency—maintaining excess capacity that appears wasteful during normal operations. This conflicts with optimization imperatives that dominate contemporary management thinking. Organizations must balance efficiency gains against resilience requirements, recognizing that maximum efficiency often coincides with maximum fragility.

Strategic redundancy differs from simple duplication. It involves diversifying dependencies, creating alternative pathways, and distributing critical functions across independent modules. A supply chain with multiple suppliers across different regions exhibits redundancy. A technology infrastructure with failover systems and distributed processing demonstrates strategic backup capabilities.
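A short calculation shows why independence matters more than the raw number of backups. The figures below are hypothetical, and the formula holds only under the stated assumption that supplier failures are statistically independent.

```python
def joint_outage_probability(p_fail, n_suppliers):
    """Probability that all suppliers fail together, ASSUMING independent failures."""
    return p_fail ** n_suppliers

p = 0.05  # hypothetical chance that any one supplier fails in a given period

single = joint_outage_probability(p, 1)
three_independent = joint_outage_probability(p, 3)
three_same_region = p  # if one regional shock disables all three, duplication adds nothing

print(single, three_independent, three_same_region)
```

Three independent suppliers reduce the chance of a total outage from 5% to roughly 0.0125%, whereas three suppliers exposed to the same regional shock leave it at about 5%. Real dependencies usually fall somewhere between these two extremes.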

🧭 Navigating Uncertainty with Adaptive Strategies

Fixed strategic plans become liabilities when confronting complex adaptive systems. The pace of change and unpredictability of interactions require organizational agility—the capacity to sense environmental shifts, interpret their implications, and adjust responses rapidly.

Adaptive strategies embrace uncertainty rather than attempting to eliminate it. They employ iterative approaches with rapid feedback cycles, allowing organizations to learn and adjust continuously. Instead of comprehensive long-term plans, adaptive strategies use directional guidance with tactical flexibility.

The Role of Distributed Intelligence

Centralized decision-making struggles to process the information flows generated by complex systems. Distributed intelligence—empowering local actors with decision-making authority within defined parameters—enables faster, more context-sensitive responses. This approach mimics natural systems, where adaptation occurs simultaneously across multiple scales.

Implementing distributed intelligence requires redefining organizational structures and governance models. It demands trust in frontline personnel, investment in their capabilities, and acceptance that some local decisions may prove suboptimal. However, the aggregate benefit—organizational responsiveness that matches environmental complexity—justifies individual imperfections.

Technology as Amplifier and Vulnerability

Digital technologies simultaneously enhance our ability to understand complex systems and introduce new fragility vectors. Advanced analytics, artificial intelligence, and sensor networks provide unprecedented visibility into system dynamics. Yet these same technologies create dependencies, attack surfaces, and failure modes that didn’t exist in analog systems.

Cloud computing enables scalability and flexibility but concentrates critical infrastructure among a small number of providers. Artificial intelligence enhances decision-making but introduces opacity and potential bias. Internet-connected devices generate valuable data while expanding the attack surface for cybersecurity threats. Technology’s dual nature requires careful management of both opportunities and risks.

The Human Element in Automated Systems

As automation increases, human operators transition from routine execution to exception handling. This shift requires different skills—the ability to recognize when automated systems are failing, the judgment to override algorithms when necessary, and the creativity to devise novel responses to unprecedented situations.

However, extensive automation can degrade human skills through disuse. Pilots who rely heavily on autopilot may struggle when manual intervention becomes necessary. Financial traders dependent on algorithmic systems may lose market intuition. Maintaining human competence within automated environments represents an ongoing challenge for complex system management.

🎯 Practical Steps for Organizations and Individuals

Understanding hidden fragility yields little value without translation into actionable practices. Organizations can implement several concrete measures to enhance their navigation of complex systems.

First, conduct regular red team exercises where dedicated teams attempt to identify and exploit system vulnerabilities. These adversarial approaches surface weaknesses that conventional analysis misses. Second, establish early warning systems that monitor leading indicators of system stress rather than waiting for lagging indicators to signal problems already manifesting.

Third, cultivate organizational cultures that reward the identification and disclosure of potential problems rather than shooting the messenger. Hidden fragilities remain hidden partly because organizational dynamics discourage the reporting of bad news. Creating psychological safety for raising concerns enables earlier intervention.

Individual Competencies for Complex Environments

Individuals navigating complex systems benefit from developing specific capabilities. Systems thinking—the ability to perceive relationships, feedback loops, and emergent properties—provides foundational understanding. Tolerance for ambiguity enables functioning effectively despite incomplete information and unpredictable outcomes.

Continuous learning becomes essential as systems evolve and generate novel challenges. The skills and knowledge sufficient today may prove inadequate tomorrow. Maintaining intellectual humility—recognizing the limits of one’s understanding—prevents overconfidence that blinds us to emerging risks.

The Ethical Dimensions of System Fragility

Hidden fragilities raise significant ethical questions. When system failures impose costs on those who didn’t participate in creating the vulnerabilities, issues of justice and responsibility emerge. The 2008 financial crisis illustrates this dynamic—complex financial instruments created by sophisticated institutions triggered consequences borne by ordinary citizens globally.

Transparency becomes both a technical and ethical imperative. Stakeholders deserve to understand the risks that complex systems pose to them, yet communicating those risks effectively is difficult even for experts. Simplification aids comprehension but may distort reality. Technical accuracy may overwhelm non-specialist audiences. Balancing these competing demands requires careful judgment.

🌟 Cultivating Wisdom in Complexity

Mastering the unseen fragilities of complex systems ultimately requires wisdom: the integration of knowledge, experience, and judgment applied with humility and ethical awareness. Technical expertise provides a necessary but insufficient foundation. Understanding emerges from recognizing patterns across domains, learning from both successes and failures, and maintaining perspective during both calm and crisis.

Complex systems will continue shaping our world with increasing influence. The choice isn’t whether to engage with complexity but how to engage—with eyes open to hidden vulnerabilities, strategies designed for adaptation, and commitment to building systems that serve human flourishing despite inherent unpredictability.

The path forward requires balancing multiple tensions: efficiency versus resilience, optimization versus redundancy, centralization versus distribution, automation versus human judgment. No perfect balance exists—appropriate trade-offs shift with context and evolve over time. Success lies not in eliminating uncertainty but in developing the capabilities, structures, and mindsets to navigate it effectively.

By acknowledging what we cannot fully control, investing in antifragility, maintaining vigilance for warning signals, and fostering cultures that embrace learning and adaptation, we position ourselves not merely to survive complex system dynamics but to thrive within them. The hidden fragilities will never be entirely eliminated, but they can be understood, monitored, and managed, transforming invisible threats into known challenges we’re prepared to address.

Toni Santos is a financial systems analyst and institutional risk investigator specializing in the study of bias-driven market failures, flawed incentive structures, and the behavioral patterns that precipitate economic collapse. Through a forensic and evidence-focused lens, Toni investigates how institutions encode fragility, overconfidence, and blindness into financial architecture across markets, regulators, and crisis episodes.

His work is grounded in a fascination with systems not only as structures, but as carriers of hidden dysfunction. From regulatory blind spots to systemic risk patterns and bias-driven collapse triggers, Toni uncovers the analytical and diagnostic tools through which observers can identify the vulnerabilities institutions fail to see.

With a background in behavioral finance and institutional failure analysis, Toni blends case study breakdowns with pattern recognition to reveal how systems were built to ignore risk, amplify errors, and encode catastrophic outcomes. As the analytical voice behind deeptonys.com, Toni curates detailed case studies, systemic breakdowns, and risk interpretations that expose the deep structural ties between incentives, oversight gaps, and financial collapse.

His work is a tribute to:

- The overlooked weaknesses of Regulatory Blind Spots and Failures
- The hidden mechanisms of Systemic Risk Patterns Across Crises
- The cognitive distortions of Bias-Driven Collapse Analysis
- The forensic dissection of Case Study Breakdowns and Lessons

Whether you're a risk professional, institutional observer, or curious student of financial fragility, Toni invites you to explore the hidden fractures of market systems: one failure, one pattern, one breakdown at a time.